Most marketers associate AI with productivity hacks: a prompt here, a content draft there, maybe a chatbot on the website. That’s not what this article is about.

We’re moving beyond generative AI as a single-shot assistant. What’s shaping the next marketing frontier is the rise of autonomous AI agents—not tools that wait for your prompts, but systems that take goals, gather context, make decisions, and execute on your behalf.

These agents don’t just “generate.” They act. They can research trends, monitor campaigns, rewrite copy based on funnel data, segment audiences in real time, and push changes live. More importantly, they can handle multi-step workflows, operate across multiple platforms, and collaborate with other agents or team members. Think of them as your full-stack marketing analyst, campaign manager, and automation specialist rolled into one.

But deploying these agents isn’t plug-and-play. If you want to build something that’s actually useful—and doesn’t burn API credits hallucinating nonsense—you’ll need to understand how these systems are architected, how they use memory, how they interface with tools, and where the friction lies.

That’s what this article will cover. We’ll walk through the technical structure behind functional agents, explore frameworks that actually work in production, and break down five real-world agent workflows tailored to marketers. No generic “use AI for SEO” advice. Just practical, technical examples you can build or deploy with your current stack.

Let’s get into it.


Architecture 101: what powers a marketing AI agent

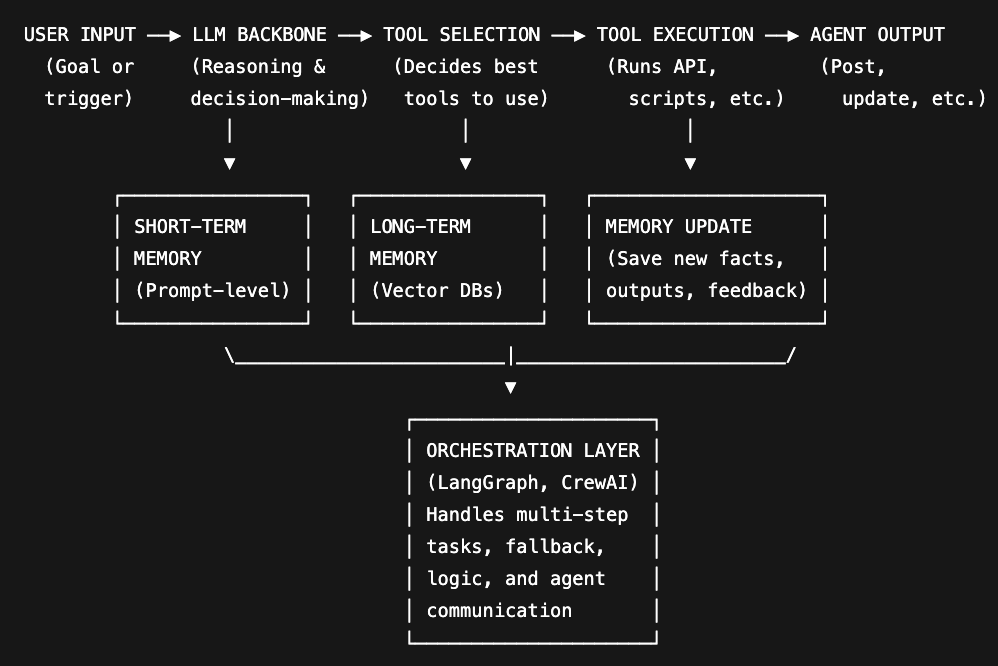

AI agents aren’t just glorified prompt wrappers. They’re systems built on top of three foundational components: reasoning (LLM), memory (for context), and tool use (for execution). Without all three, you don’t have an agent; you have a chatbot.

Let’s break this down into the parts that matter for marketers who want agents that actually do work.

1. LLM backbone: the brain

The large language model (LLM) is where decisions are made. It interprets instructions, plans actions, and determines next steps. Most marketers default to GPT-4, but that’s only one option. Claude, Mistral, and Gemini all offer different strengths: Claude handles long context well, Mistral is fast and lightweight, Gemini integrates natively with Google’s ecosystem.

The key is consistent, repeatable behavior. LLM output is probabilistic, so if your agent needs to complete multi-step tasks reliably (e.g. qualify leads, rewrite headlines, schedule them), you need a combination of:

- tightly scoped system prompts,

- few-shot examples,

- and constraints that reduce hallucinations.

This is also where most no-code “agents” fall short. Without true planning and tool routing capabilities, they become brittle and shallow.
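Here’s a minimal sketch of how those three ingredients can be packed into a single request. The role/content message layout follows the common chat-completion convention; the function name `build_messages`, the sample headlines, and the constraint wording are illustrative assumptions, not part of any specific SDK.

```python
from typing import Dict, List, Tuple

# Tightly scoped system prompt: one job, explicit constraints.
SYSTEM_PROMPT = (
    "You are a headline editor for a B2B SaaS blog. "
    "Rewrite the headline you are given. "
    "Constraints: at most 60 characters, no invented statistics, "
    "reply with the headline only."
)

# Few-shot pairs (input, ideal output) that show the expected format.
FEW_SHOT: List[Tuple[str, str]] = [
    ("10 Tips For Better Emails You Should Know", "10 Email Tips That Lift Reply Rates"),
    ("Our New Feature Is Here", "Meet Smart Segments: Targeting Without Rules"),
]

def build_messages(headline: str) -> List[Dict[str, str]]:
    """Return a chat-style message list: system prompt, few-shot turns, then the task."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for user_text, assistant_text in FEW_SHOT:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": headline})
    return messages
```

The resulting list can be passed to whichever model client you use. Keeping the prompt, examples, and constraints in one place makes it easier to version them when outputs drift.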

2. Memory: short-term and long-term context

Agents need to remember things. Not just within a session, but over time.

There are typically two layers:

- Short-term memory: whatever fits in the model’s context window, which is capped by a token limit. Fine for simple sequences, but older content falls out once the window fills.

- Long-term memory: external vector databases (e.g. Pinecone, Weaviate, Chroma) or structured note stores (e.g. Notion, Airtable) indexed with embeddings for semantic search.

This enables your agent to recall past campaign performance, customer objections, brand tone examples, or product details—all without cramming it into every prompt.

Example: If your SEO agent researched “marketing AI agents” last week, it should remember that analysis before suggesting new keywords or writing a follow-up article.
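To make the long-term layer concrete, here’s a toy sketch of store-and-recall by similarity. It’s an assumption-heavy stand-in: `embed` just counts words instead of calling a real embedding model, and `MemoryStore` is a hypothetical in-memory class rather than Pinecone, Weaviate, or Chroma. The retrieval idea (embed, compare, return the closest notes) is the same.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real setup would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class MemoryStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Counter]] = []

    def add(self, note: str) -> None:
        self._items.append((note, embed(note)))

    def search(self, query: str, k: int = 1) -> List[str]:
        query_vec = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(query_vec, item[1]), reverse=True)
        return [note for note, _ in ranked[:k]]

memory = MemoryStore()
memory.add("Keyword research on marketing AI agents: high volume, low competition")
memory.add("Brand tone: direct, technical, no hype")
memory.add("Q2 email campaign had 4% click rate")
```

Calling `memory.search("follow-up article about marketing AI agents")` surfaces last week’s keyword research, which the agent can then inject into its next prompt.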

3. Tools: where actions happen

Reasoning without execution is useless. Agents must interact with external systems:

- APIs (e.g. HubSpot, Semrush, CMS)

- Scrapers (e.g. Browserless, Puppeteer)

- Automations (e.g. Zapier, Make)

- Cloud functions for tasks like embedding generation, image creation, or deploying updates

Tool use is where autonomy becomes real. Your agent needs to know:

- when to use a tool,

- how to handle tool output,

- and how to chain results across multiple tools without breaking the workflow.

The best frameworks (LangGraph, CrewAI, AutoGen) give you a way to define toolkits and register them per agent or per task. This is what allows you to move from “generate this post” to “run this entire launch sequence.”
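Stripped of any framework, tool registration and chaining can be sketched like this. Everything here is hypothetical: `register_tool`, `run_tool`, and the two tools return fixed data so the example runs offline, where a real agent would call an SEO API and an LLM.

```python
from typing import Callable, Dict

TOOLS: Dict[str, Callable[..., dict]] = {}

def register_tool(name: str):
    """Decorator that adds a function to the agent's toolkit under a given name."""
    def wrapper(func: Callable[..., dict]) -> Callable[..., dict]:
        TOOLS[name] = func
        return func
    return wrapper

@register_tool("keyword_volume")
def keyword_volume(keyword: str) -> dict:
    # Stand-in for an SEO API call; fixed data keeps the sketch offline.
    return {"keyword": keyword, "volume": 1200}

@register_tool("draft_brief")
def draft_brief(keyword: str, volume: int) -> dict:
    # Stand-in for an LLM call that would write the brief.
    return {"title": f"Brief: {keyword}", "priority": "high" if volume >= 1000 else "low"}

def run_tool(name: str, **kwargs) -> dict:
    """Look up a tool by name and call it; unknown names fail loudly."""
    if name not in TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    return TOOLS[name](**kwargs)

# Chain two tools: the output of the first becomes the input of the second.
stats = run_tool("keyword_volume", keyword="ai agents")
brief = run_tool("draft_brief", **stats)
```

The point of the registry is that the model only ever picks a tool name and arguments. Your code stays in control of what actually gets executed.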

4. Orchestration: the invisible layer

You’ll often need multiple agents—or at least multiple roles—in a marketing workflow. Think strategist, writer, analyst, QA. That’s where orchestration frameworks come in.

Instead of building brittle chains of prompts, orchestration allows:

- agent-to-agent communication,

- task planning with forks and loops,

- context injection based on memory or feedback.

CrewAI and LangGraph are leading here. They let you define agents with roles, tools, goals, and constraints—then coordinate them with logic (if X fails, do Y).

This isn’t just for devs. With the right templates, you can deploy reusable agent playbooks across your marketing org.

A typical run, end to end:

- User input: “Track top competitor launches this month and draft summary”

- LLM: Interprets the task and breaks it into subtasks

- Tool selection: Chooses scraper for websites, summarization for press releases, CMS integration

- Execution: Runs scrapers, rewrites in brand tone, pushes summary to Notion

- Output: Task completed autonomously, with summary ready for review

Setup: how to deploy a marketing AI agent

If you want to move beyond prompt-based hacks and into true autonomy, you need more than ChatGPT and a spreadsheet. Deploying an AI agent that executes marketing tasks means assembling a modular stack: foundation model, memory, tool interface, and orchestration. You don’t need to build everything from scratch, but you do need to understand how the parts connect—and where the common failure points are.

Here’s a minimal but robust setup to get started:

1. Choose the right framework

For full agent behavior—planning, memory use, tool execution, fallbacks—you need an orchestration framework. The current leaders:

- LangGraph: Event-driven, stateful agent orchestration. Think of it as a graph of steps for LLM flows, with support for branches and loops. Ideal if you need persistent state and conditional task routing.

- CrewAI: Role-based system. Each agent has a goal, tools, memory, and can collaborate with others. Easier to grasp and deploy quickly.

- AutoGen: Great for multi-agent collaboration. High control over communication loops and workflows. More verbose but flexible.

If you’re building autonomous workflows with clear task boundaries (e.g. research → draft → schedule), CrewAI is often the fastest to get working.

2. Define your agent(s)

Each agent should have:

- A role: What’s its job? (e.g. SEO analyst, email optimizer, QA reviewer)

- A goal: What outcome does it work toward?

- A set of tools: What APIs, scripts, or external apps can it call?

- Access to memory: What does it need to remember between runs?
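Those four attributes map cleanly onto a small data structure. The sketch below is framework-agnostic: `AgentSpec`, its field names, and the tool names are assumptions chosen for illustration, not the schema of CrewAI, LangGraph, or AutoGen.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class AgentSpec:
    role: str                                              # what is its job?
    goal: str                                              # what outcome does it work toward?
    tools: List[str] = field(default_factory=list)         # names of callable tools
    memory_keys: List[str] = field(default_factory=list)   # what it recalls between runs

    def system_prompt(self) -> str:
        """Render the spec as a short system prompt for the model."""
        tools = ", ".join(self.tools) or "none"
        return f"You are the {self.role}. Goal: {self.goal} Available tools: {tools}."

seo_analyst = AgentSpec(
    role="SEO analyst",
    goal="Find five new keywords per week in our target clusters.",
    tools=["semrush_search", "notion_write"],
    memory_keys=["published_articles", "keyword_wins"],
)
```

Writing the spec down before touching a framework forces you to scope the agent, which pays off later when you debug it.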

3. Connect your tools

Most marketers already use tools like:

- CMS (WordPress, Webflow)

- CRM (HubSpot, Pipedrive)

- SEO platforms (Ahrefs, Semrush)

- Email platforms (ActiveCampaign, Mailchimp)

Wrap these in APIs or call them via automation platforms like Zapier or Make. Then expose them to your agent through a tool registration layer.

If you don’t want to write tool wrappers from scratch, LangChain and CrewAI both ship tool abstractions for wrapping simple API calls.

4. Set up persistent memory

You need long-term memory if your agent is going to learn from past actions or hold state across workflows.

Options:

- Vector DB: Pinecone, Weaviate, Chroma

- Embeddings: Use OpenAI or Cohere to embed inputs and outputs

- Structured store: Notion or Airtable with embedding + search

This memory should include:

- Past campaign summaries

- Brand tone and style examples

- Prior tool outputs

- Feedback loops (what worked, what didn’t)

5. Trigger the agent

You can run agents manually or hook them into triggers:

- Webhooks: Trigger when a new lead comes in or traffic drops

- Scheduled runs: Daily SEO monitoring or weekly content review

- Slack commands: “/run-agent seo_report”

This is where the agent stops being a toy and becomes a background operator—executing tasks while your team focuses on strategy.
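As a rough sketch, the Slack-style command above could be routed to an agent like this. The handler and the agent functions are hypothetical placeholders; a real integration would receive the text from Slack’s slash-command payload and kick off the actual agent run.

```python
from typing import Callable, Dict

def seo_report() -> str:
    return "seo_report finished"  # placeholder for the real agent run

def lead_qualifier() -> str:
    return "lead_qualifier finished"  # placeholder for the real agent run

AGENTS: Dict[str, Callable[[], str]] = {
    "seo_report": seo_report,
    "lead_qualifier": lead_qualifier,
}

def handle_command(text: str) -> str:
    """Parse '/run-agent <name>' and run the matching agent."""
    parts = text.strip().split()
    if len(parts) != 2 or parts[0] != "/run-agent":
        return "Usage: /run-agent <agent_name>"
    agent = AGENTS.get(parts[1])
    if agent is None:
        return f"Unknown agent: {parts[1]}"
    return agent()
```

The same `AGENTS` lookup can sit behind a webhook endpoint or a scheduler, so every trigger type funnels into one entry point.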

6. Review + iterate

Even the best agents need calibration:

- Log everything: Inputs, outputs, tool usage, memory retrievals

- Validate: Set up confidence thresholds or include QA agents

- Retrain or re-prompt: Adjust based on failure cases or drift

Think of your agent as a junior team member. The first week will be rough. After 20 iterations, it’ll start outperforming basic workflows.
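“Log everything” can start as simply as wrapping each step. In this sketch, `logged_step`, `RUN_LOG`, and `shorten` are illustrative names; in production the records would go to a file or logging service rather than a list in memory.

```python
import json
import time
from typing import Any, Callable, List

RUN_LOG: List[str] = []  # stand-in for a log file or logging service

def logged_step(step: str, func: Callable[..., Any], **inputs: Any) -> Any:
    """Run one agent step and append a JSON record of its inputs and output."""
    started = time.time()
    output = func(**inputs)
    RUN_LOG.append(json.dumps({
        "step": step,
        "inputs": inputs,
        "output": output,
        "seconds": round(time.time() - started, 3),
    }))
    return output

def shorten(text: str, limit: int) -> str:
    """Example step: trim a subject line to a character limit."""
    return text[:limit]

result = logged_step("shorten_subject", shorten, text="Spring launch: everything new", limit=13)
```

With one JSON record per step, failure cases become searchable, which is what makes the re-prompting loop practical.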

Real-world workflows: 5 agent playbooks for marketers

Most of what’s written about AI in marketing still lives at the “generate ideas” level. That’s not what you need. You need agents that do actual work across your stack — researching, executing, adapting in real time. These five workflows go beyond novelty and into high-leverage automation.

1. SEO scout agent

Goal: Identify high-potential keywords weekly, generate content briefs, and push them to the content pipeline.

Components:

- Tools: Semrush API, Google Trends, internal analytics (GA4 or Amplitude), Notion or Airtable for storage

- LLM Backbone: GPT-4 or Claude Opus

- Memory: Stores previous keyword wins, published articles, cluster mapping

Flow:

- Query Semrush for new keywords in target clusters

- Cross-check against internal performance data

- Eliminate duplicates or cannibalization risks

- Auto-generate content briefs with outline + intent tags

- Push briefs to Notion for team review

Bonus: Add agent-based QA to check if briefs match the brand’s tone and business goals.
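The duplicate-elimination step is plain data filtering, and it’s worth keeping it out of the LLM entirely. A minimal sketch, assuming keyword rows shaped like `{"keyword": ..., "volume": ...}` (a made-up shape, not the Semrush response format) and using exact match after normalization as a crude proxy for cannibalization:

```python
from typing import Dict, List, Set

def normalize(keyword: str) -> str:
    """Lowercase and collapse whitespace so near-identical keywords compare equal."""
    return " ".join(keyword.lower().split())

def filter_new_keywords(candidates: List[Dict], published: Set[str], min_volume: int = 100) -> List[Dict]:
    """Drop keywords already covered, repeated, or below the volume floor; sort by volume."""
    covered = {normalize(k) for k in published}
    seen: Set[str] = set()
    fresh: List[Dict] = []
    for item in candidates:
        key = normalize(item["keyword"])
        if key in covered or key in seen or item["volume"] < min_volume:
            continue
        seen.add(key)
        fresh.append(item)
    return sorted(fresh, key=lambda item: item["volume"], reverse=True)

candidates = [
    {"keyword": "AI marketing agents", "volume": 900},
    {"keyword": "ai  marketing agents", "volume": 900},   # duplicate after normalizing
    {"keyword": "marketing automation", "volume": 5000},  # already published
    {"keyword": "agent orchestration", "volume": 1500},
    {"keyword": "crew ai pricing", "volume": 40},         # below the volume floor
]
published = {"Marketing Automation"}
briefs_queue = filter_new_keywords(candidates, published)
```

Only the keywords that survive this filter need to reach the brief-writing step, which keeps token spend down.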

2. Lifecycle email optimizer

Goal: Monitor user behavior, identify drop-offs in onboarding or upgrade flows, and auto-suggest improved email variants.

Components:

- Tools: Segment/Amplitude, Email API (ActiveCampaign, Mailchimp), internal copybank, A/B testing platform

- LLM Backbone: GPT-4 with few-shot prompt examples

- Orchestration: CrewAI with feedback loop agent

Flow:

- Detect conversion drop between onboarding steps

- Identify the responsible email(s)

- Retrieve tone-validated email examples

- Generate alternative subject lines and CTAs

- Deploy A/B test via email API and monitor CTR/deliverability

Optional extension: Add agent logic for interpreting test results and triggering rollouts automatically when lift > X%.
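The “lift > X%” rule can be written down explicitly. This sketch uses relative click-through lift and a minimum sample size as the rollout gate; the threshold values are placeholders, and it deliberately skips statistical significance testing, which you’d want before automating rollouts for real.

```python
def relative_lift(control_clicks: int, control_sends: int,
                  variant_clicks: int, variant_sends: int) -> float:
    """Relative CTR lift of the variant over the control, e.g. 0.25 means +25%."""
    if min(control_clicks, control_sends, variant_sends) <= 0:
        raise ValueError("need non-zero control clicks and sends on both arms")
    control_ctr = control_clicks / control_sends
    variant_ctr = variant_clicks / variant_sends
    return (variant_ctr - control_ctr) / control_ctr

def should_roll_out(lift: float, threshold: float, variant_sends: int, min_sends: int) -> bool:
    """Roll out only if the lift clears the threshold and the sample is large enough."""
    return variant_sends >= min_sends and lift > threshold

# Control: 40 clicks / 1000 sends (4% CTR). Variant: 50 clicks / 1000 sends (5% CTR).
lift = relative_lift(control_clicks=40, control_sends=1000, variant_clicks=50, variant_sends=1000)
decision = should_roll_out(lift, threshold=0.10, variant_sends=1000, min_sends=500)
```

Here the variant’s CTR is 5% against a 4% control, a relative lift of about 25%, so it clears a 10% threshold.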

3. Competitive content cloner

Goal: Monitor top competitors’ content across LinkedIn and their blogs, extract the top-performing topics, and repurpose them in your brand’s tone with an updated angle.

Components:

- Tools: Browserless (headless scraper), LinkedIn API, internal tone training data, CMS API

- LLM Backbone: GPT-4 with a carefully tuned system prompt

- Memory: Stores used competitor content to avoid duplication

Flow:

- Scrape LinkedIn posts and blogs from predefined competitors weekly

- Use engagement metrics (likes/comments) to rank top content

- Extract core ideas and transform into content in your brand voice

- Auto-schedule drafts to your CMS or Buffer for approval

Safety net: Include an agent-based fact-check pass to catch hallucinations or off-brand phrasing before publishing.

4. Product Hunt launch agent

Goal: Coordinate copywriting, asset preparation, and launch scheduling for Product Hunt or similar platforms.

Components:

- Tools: Notion or Trello (task tracking), CMS, Buffer/X, PH API

- Agents: Copywriter, image checker, campaign coordinator

Flow:

- Create internal tasks with due dates (launch timeline)

- Generate Product Hunt tagline, description, and founder’s comment

- Draft tweets and emails for launch week

- Verify asset specs (thumbnail, banner)

- Schedule all assets + notify team of Go Live checklist

Useful add-on: Integration with Slack to handle feedback loops pre-launch with an “Approve / Revise” mechanism.

5. Lead qualification agent

Goal: Process inbound demo or trial requests, qualify based on ICP rules, and write CRM notes with personalization.

Components:

- Tools: CRM API (HubSpot, Salesforce), LLM with structured system prompt, third-party firmographic enrichment (Clearbit, Apollo)

- Memory: Keeps track of common rejection patterns

Flow:

- Ingest form submission or meeting request

- Enrich data via API (company size, revenue, location)

- Score against ICP rules

- Generate CRM notes with summary + suggested outreach angle

- If disqualified, add to newsletter list with reasoning saved for analytics

Smart variant: Let the agent recommend different follow-up playbooks (e.g. send case study, offer audit, refer to self-serve plan).
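Scoring against ICP rules works best as explicit code, with the LLM reserved for writing the CRM note. The rules, weights, field names, and threshold below are all invented for illustration; swap in your own ICP definition.

```python
from typing import Dict, List, Tuple

def score_lead(lead: Dict) -> Tuple[int, List[str]]:
    """Return (score, reasons) from simple, explicit ICP rules."""
    score, reasons = 0, []
    if lead.get("employees", 0) >= 50:
        score += 40
        reasons.append("company size fits")
    if lead.get("industry") in {"saas", "ecommerce"}:
        score += 30
        reasons.append("target industry")
    if lead.get("country") in {"US", "UK", "DE"}:
        score += 30
        reasons.append("served region")
    return score, reasons

def qualify(lead: Dict, threshold: int = 70) -> Dict:
    """Qualified leads go to sales; the rest go to the newsletter list with reasons saved."""
    score, reasons = score_lead(lead)
    status = "qualified" if score >= threshold else "newsletter"
    return {"status": status, "score": score, "reasons": reasons}

result = qualify({"employees": 120, "industry": "saas", "country": "FR"})
```

Because the reasons are returned alongside the score, they can be stored for analytics and handed to the LLM as grounded input for the outreach angle.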

Each of these agents represents a compound workflow: input, enrichment, decision, execution. They’re not just helpers — they’re process owners. They turn what used to be hours of manual switching between tools into automated loops that adapt to results.

Where most AI agents break: challenges to avoid

Most AI agents fail not because the model is wrong—but because the system is fragile, misaligned, or poorly scoped. If you’re deploying agents in production workflows, you’ll run into these problems fast unless you plan for them.

Here’s where most setups break, and how to avoid wasting time with agents that don’t deliver.

1. Lack of scoped reasoning

The default GPT-4 system prompt is not a strategy. If you let the LLM “decide” too much without constraints, you get inconsistency, hallucinations, or irrelevant output. The issue isn’t the model—it’s overdelegation.

What to do instead:

- Use clearly defined agent goals (“Summarize product feedback, highlight churn risks”).

- Include specific constraints (“Don’t suggest pricing changes; only summarize user concerns”).

- Use examples and rejection criteria to guide outputs (few-shot prompting + validation patterns).

2. Fragile tool usage

Calling tools is easy. Handling what they return is harder. Most agent failures happen when:

- API responses are unpredictable

- Data formats change

- A tool fails and there’s no fallback

Solution:

- Wrap every tool call with input/output validation

- Use try/catch patterns in orchestrators

- Build fallbacks (e.g. “if Semrush API fails, fetch cached keyword data from memory”)

LangGraph is particularly strong here—it lets you define conditional transitions and fallbacks per tool node.
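Outside of any framework, the validate-then-fall-back pattern looks roughly like this. The function names and the keyword row shape are assumptions for the sketch, and the failing API is simulated.

```python
from typing import Callable, Dict, List

def validate_keywords(payload: object) -> List[Dict]:
    """Reject responses that are not a list of {'keyword': str, 'volume': int} rows."""
    if not isinstance(payload, list):
        raise ValueError("expected a list")
    for row in payload:
        if (not isinstance(row, dict)
                or not isinstance(row.get("keyword"), str)
                or not isinstance(row.get("volume"), int)):
            raise ValueError(f"malformed row: {row!r}")
    return payload

def call_with_fallback(primary: Callable[[], object], fallback: Callable[[], object]) -> Dict:
    """Try the primary tool; on any failure or bad format, use the fallback instead."""
    try:
        return {"source": "primary", "data": validate_keywords(primary())}
    except Exception as error:  # timeouts, network errors, changed formats
        return {"source": "fallback", "data": validate_keywords(fallback()), "error": str(error)}

def flaky_seo_api() -> object:
    raise TimeoutError("SEO API timed out")  # simulated outage

def cached_keywords() -> object:
    return [{"keyword": "ai agents", "volume": 1200}]  # simulated cache read from memory

result = call_with_fallback(flaky_seo_api, cached_keywords)
```

Recording which source was used, and why, keeps the fallback from silently masking a broken integration.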

3. Over-reliance on short-term context

If your agent doesn’t have persistent memory, it will behave like it has amnesia. Every run will feel like starting over, making it useless for iterative workflows or compounding learning.

Fix:

- Connect agents to a vector DB (Chroma, Weaviate, Pinecone) or a Notion/Airtable store indexed with embeddings

- Store past inputs/outputs, decisions, and performance metrics

- Use similarity search to inject relevant historical context into new runs

This is essential for agents doing campaign analysis, content generation, or anything that involves continuity over time.

4. No output verification

One of the most common mistakes: trusting the first output. Even great LLMs make basic logic errors, especially with numbers, names, and internal logic across steps.

Fix:

- Build QA agents into the workflow. One writes, the other critiques.

- Use assertion checks: “Did the agent mention the product name? Is the CTA present?”

- Use embedding similarity to compare new outputs against previously successful examples

Think of it as peer review for AI. Don’t skip it just because the model sounds confident.
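Assertion checks like the ones above don’t need a model at all. A minimal sketch, where the draft shape, the product name, the CTA phrases, and the 50-character subject limit are all example assumptions:

```python
from typing import Dict, List

def check_email(draft: Dict[str, str], product_name: str, cta_phrases: List[str]) -> List[str]:
    """Return a list of failed checks; an empty list means the draft passes."""
    failures: List[str] = []
    body = draft["body"].lower()
    if len(draft["subject"]) > 50:
        failures.append("subject longer than 50 characters")
    if product_name.lower() not in body:
        failures.append("product name missing")
    if not any(phrase.lower() in body for phrase in cta_phrases):
        failures.append("CTA missing")
    return failures

draft = {
    "subject": "Your trial ends Friday",
    "body": "Keep your dashboards running. Upgrade today.",
}
problems = check_email(draft, product_name="Acme Analytics", cta_phrases=["upgrade today", "book a demo"])
```

In this example the draft has a CTA but never names the product, so it would be sent back for revision instead of going out.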

5. Poor trigger design

If your agent only runs on manual prompts, it’s a tool. To act like a team member, it needs to run when work needs to happen—not when someone remembers to prompt it.

Best practices:

- Use webhooks to trigger on data (new lead, traffic spike, cart abandonment)

- Schedule recurring runs (e.g. “Monday 9 AM, update SEO report”)

- Use event streams from your product, CRM, or analytics stack

And always log what the agent did. Treat it like a human teammate you want visibility into.

6. Misaligned expectations

An AI agent can automate decisions. It can’t create business strategy from scratch. A lot of failed experiments come from trying to assign agents vague goals like “help us improve growth.”

Counter this by:

- Scoping agents tightly. Give them specific, measurable outputs.

- Starting small. One narrow, repeatable task done well beats a failed generalist agent.

- Layering autonomy over time. Move from suggestion → execution → optimization loops.

These systems work. But only if you treat them like systems—not like magic.

Pathmonk as the orchestrator: bridging intent with automation

In the AI ecosystem, most tools operate in silos. One generates content. Another triggers an email. A third tracks clicks. What’s missing is an orchestrator—something that understands what your visitors are actually doing and routes that intelligence into action.

Dear marketer, meet Pathmonk 😎

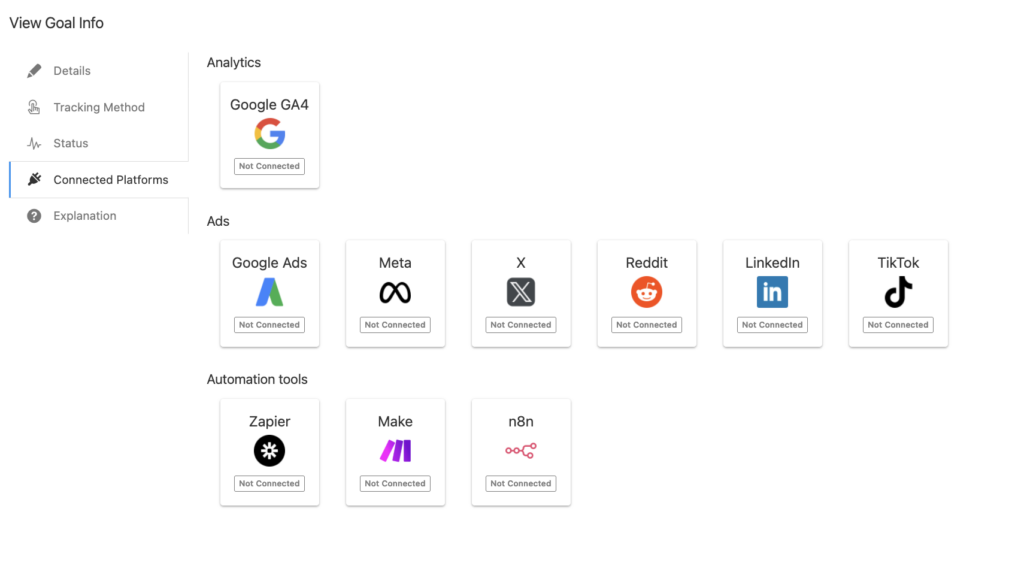

It’s not just another data layer. Pathmonk functions as both a real-time decision engine on your website and a precision signal emitter across your stack. And because it connects natively with automation tools like n8n, Zapier, or Make, it becomes the central intelligence layer in your AI-driven marketing workflows.

1. Adaptive agent: your website personalizes itself on intent

Pathmonk detects the signals most tools ignore: scroll velocity, revisit frequency, form hesitation, bounce behavior by source. This behavioral layer powers real-time personalization without relying on user input or third-party cookies.

Here’s what that means:

- Show different micro-experiences based on journey stage (awareness vs. consideration)

- Trigger on-site changes without any dev work

- Push tailored content, CTAs, or lead magnets depending on behavioral probability scores

This happens while the user is still on the site—autonomous, intent-aware adaptation in action.

Example use case: A B2B cybersecurity company uses Pathmonk to segment visitors into awareness, mid-funnel, and decision-stage groups in real time. A first-time visitor from a G2 referral sees analyst validation. A returning visitor who’s viewed pricing twice gets a testimonial-driven experience. No user input needed. All mapped via micro-behavior signals.

2. Automation hub: pipe intelligence into your automated workflows

The real power shows up when Pathmonk doesn’t just personalize the website—but triggers external workflows based on what it learns.

Thanks to its integrations with tools like n8n or Zapier, Pathmonk becomes your signal source—sending conversion data, behavioral thresholds, and predictive intent to the rest of your stack.

This enables automation chains that are proactive, not reactive. Here are some advanced use cases (built with n8n):

→ High-intent lead follow-up with dynamic enrichment and routing

Trigger: A visitor submits a demo request form after interacting with key decision-stage content (e.g. pricing, product explainer, testimonials).

Pathmonk emits:

- Conversion event

- Visitor intent score

- Session behavior breakdown (e.g. entry source, touchpoints, friction signals)

n8n flow:

- Catch webhook from Pathmonk with full lead + behavioral data

- Enrich contact using Clearbit or Apollo

  → Get company size, funding, industry, tech stack

- Run lead scoring logic

  → Decision-tree evaluates sales-readiness (e.g. is ICP + enterprise-tier?)

- Dynamic routing

  - If enterprise-fit: send Slack alert to AE with summary and urgency level

  - If mid-market: create deal in HubSpot with pre-filled notes and auto-assign to SDR

  - If not in ICP: trigger nurture email sequence and add to marketing list

- Generate personalized follow-up email using GPT

  → Includes custom value prop based on industry + visited pages

- Log entire flow to Notion CRM dashboard for marketing attribution review
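In n8n this branching would live in Switch or IF nodes, but the routing decision in this flow is easy to express as code. A sketch in Python, where the payload fields `in_icp` and `tier` and the returned action names are hypothetical and do not reflect Pathmonk’s actual webhook schema:

```python
from typing import Dict

def route_lead(lead: Dict) -> str:
    """Return the next action for an enriched lead, one branch per routing rule."""
    if lead.get("in_icp") and lead.get("tier") == "enterprise":
        return "slack_alert_to_ae"
    if lead.get("in_icp") and lead.get("tier") == "mid_market":
        return "hubspot_deal_for_sdr"
    # Anything outside the ICP, or with missing data, goes to nurture.
    return "nurture_email_sequence"

action = route_lead({"in_icp": True, "tier": "enterprise", "intent_score": 0.82})
```

Defaulting to the nurture branch means an incomplete enrichment result never pages a sales rep by mistake.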


→ Anonymous company visit → ABM campaign trigger

Trigger: A company visits your website and consumes mid-to-bottom funnel content, but no user submits a form.

Pathmonk emits:

- Company name

- Company domain

- Visit timestamp

- Source type (e.g. organic, direct)

- Country/location of visit

n8n flow:

- Webhook receives company-level visit signal from Pathmonk via Zapier

  → Example: Halliburton, sheraton.com, location: UAE, source: organic

- Run real-time enrichment using Clearbit or Apollo

  → Input: company domain

  → Output: company industry, size, tech stack, revenue, employee list

- Filter and match against ABM criteria

  → Example: Only proceed if company = ICP, revenue > $10M, tech = match

- Fetch key decision-makers at that company

  → Roles: VP Marketing, Head of Growth, Digital Strategy Director

  → Region: same as visit geo if available

- Create ABM contact list

  - Push contacts into LinkedIn Campaign Manager audience

  - Create tailored Meta or X audience

  - Tag in CRM as “Anonymous visitor – high-fit company”

- Generate outreach assets using GPT

  → Ad copy tailored to company industry and likely intent (inferred from source/page path)

  → Optional: generate outbound email draft for SDR outreach

- Send internal Slack alert

  - Include company, visit context, contact list, campaign angle

  - Option for sales to assign and add to manual outreach

- Log all actions in CRM or Notion

  → Track company journey even if no one fills out a form

This automation uses Pathmonk to surface otherwise invisible demand. The key insight is that while you don’t know who visited, you know which company showed intent—and can build an entire outbound motion from there.

The real advantage: it’s not passive data

Most tools hand over reports. Pathmonk hands over qualified decisions. It understands what happened, assigns context, and pushes signals into workflows that operate across channels.

With n8n handling the orchestration, you can build modular playbooks triggered by live intent—without waiting for form fills, CRM enrichment, or campaign rules.

Pathmonk becomes the real-time interpreter. n8n becomes the executor.

That’s what AI-enabled marketing workflows look like when you stop reacting to data and start acting on behavior.

FAQs on AI agents for marketing automation

1. What’s the difference between an AI agent and traditional marketing automation?

Traditional marketing automation systems operate on if-this-then-that logic: predefined rules, linear workflows, and static triggers. They’re reactive by design. You configure them to send an email when someone fills out a form, or trigger a retargeting campaign when a user lands on a specific URL. While this is useful, it’s rigid. The system can’t evaluate why something happened, what the optimal next step might be, or adapt the message based on changing intent in real time.

AI agents, on the other hand, are autonomous systems that reason through multiple steps and select actions dynamically. They don’t just react—they interpret. Using LLMs (like GPT-4), memory systems, and access to tools or APIs, they can make decisions mid-flow. For instance, an AI agent monitoring user behavior might not only detect drop-off on a demo page but generate three alternate outreach angles, test them via email, and adjust the strategy based on engagement—without human intervention. It’s not just automation. It’s active orchestration based on reasoning, feedback, and dynamic tool use.

2. How do AI agents maintain context across workflows?

Maintaining context is one of the biggest challenges in building functional AI agents—especially in marketing, where interactions are fragmented across sessions, devices, and platforms. AI agents maintain short-term context through the LLM’s token window, which lets them “remember” inputs and outputs within a single task. But that’s not enough. For cross-session memory, agents need external memory layers, such as vector databases (e.g. Pinecone, Chroma, Weaviate) or embedded structured stores like Notion or Airtable.

These external memory layers let agents store and retrieve semantically relevant information across tasks and time. For example, an agent optimizing email performance could store past campaign headlines, engagement metrics, and rejection patterns. On the next run, it can fetch high-performing subject lines and avoid previously flagged phrases. In multi-agent environments, memory enables collaboration: the strategist agent can hand contextual insights to the copywriter agent, and so on. Without this persistent memory architecture, agents will repeat themselves, lose nuance, or start from zero every time—which limits their usefulness in real-world marketing ops.

3. Which orchestration frameworks are best for deploying AI agents in production?

Several orchestration frameworks have emerged to help developers and marketers deploy AI agents with structured behavior, but the best one depends on your use case. LangGraph is built for complex, stateful workflows. It treats tasks as directed graphs, where each node represents a step, tool call, or agent decision. It’s especially powerful for handling fallback logic, conditional flows, and parallel executions—ideal if you need persistent agents that adapt based on user behavior or campaign performance over time.

CrewAI, by contrast, is focused on multi-agent collaboration. Each agent in a CrewAI system has a defined role (e.g. SEO strategist, copywriter, analyst), access to specific tools, and can interact with other agents via structured message-passing. This makes it ideal for marketers deploying collaborative flows where one task branches into specialized subtasks. For example, a top-funnel content agent might pass leads to a nurture agent or flag edge cases for a QA reviewer agent. If you’re looking for fast deployment and human-like coordination logic, CrewAI is a solid choice. Meanwhile, AutoGen is best suited for scenarios requiring granular communication between agents with less orchestration overhead—but it’s more developer-heavy. Most marketing teams starting out will benefit from CrewAI’s simplicity, then scale into LangGraph as workflows mature.

4. How can Pathmonk enhance AI agent performance beyond website personalization?

Pathmonk’s role extends far beyond on-site experience adjustments. While it’s often framed as a personalization engine, its core value lies in being an intent signal layer. It interprets how users interact with your site—what pages they view, how they navigate, where they hesitate—and classifies their journey stage and intent level. This structured signal output (conversion event, engagement pattern, traffic source, etc.) can be used to trigger external workflows managed by AI agents. Instead of relying on isolated user actions (e.g. form fills), agents can respond to inferred intent, giving them earlier and more reliable activation points.

For example, an AI agent tasked with campaign optimization might use Pathmonk data to segment visitors not just by traffic source, but by predicted conversion probability. If a visitor shows mid-funnel behavior but doesn’t convert, the agent can personalize retargeting copy differently than it would for a top-funnel user. More advanced use cases include enriching CRM entries with behavioral classifications from Pathmonk, feeding AI agents with user timelines for sales intel, or even retraining content generation agents based on shifting engagement heatmaps. Pathmonk becomes the behavioral lens through which agents see and interpret opportunity—beyond static CRM fields or demographic tags.

5. What’s the best way to test and QA AI agent outputs in marketing workflows?

Testing AI agent behavior requires a multi-layered approach—especially in marketing, where subjective quality (e.g. copy tone, positioning accuracy) intersects with business logic (e.g. segment rules, ICP compliance). The first step is to separate testing into syntactic validation and semantic evaluation. Syntactic validation ensures agents are producing outputs in the correct structure: subject lines under 50 characters, emails with a CTA, JSON objects with required fields. This part can be automated through hardcoded checks or lightweight QA agents that evaluate structure and formatting.

Semantic evaluation is more complex. It involves assessing whether the output aligns with brand tone, strategic goals, or performance benchmarks. One approach is to implement shadow mode testing, where agents produce outputs that are logged but not deployed. Human reviewers or second-layer agents can score these outputs against historical performance or brand guidelines. A/B testing can also be extended to AI-generated outputs by routing only a subset of traffic through the agent while keeping a control group in place. Over time, embedding-based similarity scoring can flag outputs that deviate from known high performers. Combined, these approaches ensure AI agents improve over time without risking campaign integrity.

6. How does agent-to-agent communication work in marketing environments?

Agent-to-agent communication is central to building scalable, modular systems—especially in marketing workflows that involve layered tasks like planning, content creation, analysis, and QA. The typical setup involves each agent having a defined role, input schema, and expected output format. Communication happens via structured messages passed between agents. For instance, a “content strategist” agent might generate a topic cluster and pass it to a “copywriting” agent, which produces headlines and hooks. That output could then go to a “compliance” or “brand voice” agent for validation before reaching the CMS.

This communication can be synchronous or asynchronous, depending on the orchestration framework. In CrewAI, agent interactions are often prompt-based with embedded tools, while in LangGraph or AutoGen, you can define message schemas, decision forks, and error-handling logic. Practical marketing applications include agents that analyze funnel drop-offs, pass suggestions to campaign managers, and flag outliers to creative agents. What makes this powerful is that you don’t need to write monolithic agents that do everything—each agent can specialize, evolve independently, and be reused across workflows. The system becomes more composable, easier to debug, and more reliable under scale.
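The strategist, copywriter, and brand-voice hand-off described above can be sketched as structured messages passed down a pipeline. This is a framework-free illustration: the `Message` class and the three agent functions are hypothetical, and each one uses trivial logic where a real agent would call an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Message:
    sender: str
    payload: Dict
    history: List[str] = field(default_factory=list)  # which agents have handled it

def strategist(msg: Message) -> Message:
    theme = msg.payload["theme"]
    topics = [f"{theme} for beginners", f"{theme} mistakes"]
    return Message("strategist", {"topics": topics}, msg.history + ["strategist"])

def copywriter(msg: Message) -> Message:
    headlines = [topic.title() for topic in msg.payload["topics"]]
    return Message("copywriter", {"headlines": headlines}, msg.history + ["copywriter"])

def brand_voice(msg: Message) -> Message:
    # Validation stand-in: approve only headlines of 60 characters or fewer.
    approved = [h for h in msg.payload["headlines"] if len(h) <= 60]
    return Message("brand_voice", {"approved": approved}, msg.history + ["brand_voice"])

def run_pipeline(start: Message, agents: List[Callable[[Message], Message]]) -> Message:
    """Pass the message through each agent in order and return the final result."""
    msg = start
    for agent in agents:
        msg = agent(msg)
    return msg

final = run_pipeline(Message("user", {"theme": "email automation"}),
                     [strategist, copywriter, brand_voice])
```

Because every agent reads and returns the same message shape, you can reorder, replace, or reuse agents without rewriting the rest of the workflow.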