Too many businesses focus their marketing efforts solely on customer acquisition. However, if you don’t invest in optimizing your conversion rate, it’s akin to throwing sand into the ocean. You will be spending your time, budget, and effort without moving the revenue needle.

So, what exactly does getting started with CRO entail? Are we just changing the website colors, tweaking images, or altering messages? *shivers in marketing confusion* 😅

Hang in there. While these elements can play a role, CRO is much more comprehensive. It involves understanding user behavior, testing hypotheses, and making data-driven decisions that impact the entire customer journey funnel.

And it all starts with some good old testing!


What Is CRO Testing?

Simply speaking, CRO testing is a structured and systematic approach to improving the performance of a website or digital product by increasing the percentage of visitors who take a desired action.
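To make the definition concrete, here is a minimal sketch of how a conversion rate is calculated. The function name and the figures are hypothetical and only illustrate the arithmetic.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the desired action (0.0 to 1.0)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


# Hypothetical figures: 240 sign-ups from 8,000 visitors.
rate = conversion_rate(240, 8000)
print(f"Conversion rate: {rate:.1%}")
```

Every test described below is an attempt to move this number for a specific page or action.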

The act of testing in CRO involves creating hypotheses based on insights or data, designing controlled experiments, and then analyzing the results to determine the most effective changes that enhance user experience and drive conversions.

The key aspects of this type of testing include:

- Creating hypotheses: The first step in CRO testing involves identifying areas of potential improvement and formulating hypotheses. This might stem from observed user behavior, feedback, or performance data. For example, if data shows a high bounce rate on a landing page, a hypothesis might be that simplifying the page layout will improve conversions.

- Designing experiments: Next, controlled experiments are designed to test these hypotheses. The most common form of CRO testing is A/B testing, where two versions of a page or element are compared to see which performs better. In A/B testing, for example, a business might test two different call-to-action buttons to determine which one drives more clicks.

- Analyzing results: Once the experiment is complete, the results are analyzed to assess the impact of the changes. Key metrics like conversion rate, bounce rate, and time on page are evaluated to determine which variation performed better. If the results are statistically significant, the winning variation can then be implemented as a permanent change.

- Iterating and improving: CRO testing is an ongoing process of iteration and improvement. After implementing a successful change, businesses continue to test and optimize to further enhance their conversion rates.

We will delve deeper into these steps, but for now just keep this framework in mind.

Why Should I Be Doing CRO Testing?

Optimizing your conversion rate is crucial for businesses because it enables them to maximize the value of their existing traffic. Rather than spending more on customer acquisition, CRO allows businesses to enhance their user experience and increase the likelihood of achieving their desired outcomes.

By focusing on data-driven experimentation, a good CRO approach will help businesses to:

- Boost revenue: Even small improvements in conversion rates can lead to significant increases in revenue, especially for high-traffic websites or high-ticket products.

- Improve user experience: By identifying and eliminating friction points in the user journey, you will enhance overall user satisfaction and engagement.

- Maximize ROI: CRO ensures that businesses get the most out of their marketing investments by improving the effectiveness of their digital channels.
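A quick back-of-the-envelope calculation shows why small improvements matter. This is only a sketch: the traffic, conversion rates, and average order value are made-up numbers, and the simple revenue model (visitors × conversion rate × average order value) is an assumption that ignores returns, repeat purchases, and so on.

```python
def monthly_revenue(visitors: int, conversion_rate: float, average_order_value: float) -> float:
    """Simplified revenue model: visitors x conversion rate x average order value."""
    return visitors * conversion_rate * average_order_value


# Hypothetical store: 50,000 monthly visitors and an $80 average order.
baseline = monthly_revenue(50_000, 0.020, 80.0)   # 2.0% conversion rate
improved = monthly_revenue(50_000, 0.022, 80.0)   # 2.2% conversion rate

print(f"Baseline revenue: ${baseline:,.0f}")
print(f"Improved revenue: ${improved:,.0f}")
print(f"Extra revenue from the same traffic: ${improved - baseline:,.0f}")
```

In this example the traffic and the acquisition spend stay the same; only the conversion rate changes.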

Now, it’s time to dive into the interesting part 😉

7 Steps to Create a CRO Testing Strategy

1. Identify Growth Goals and Objectives

The first step in creating a solid CRO strategy is identifying clear objectives that will bring growth to your business. This involves defining specific, measurable outcomes you want to achieve. For example:

- Increase sales: A specific objective could be to increase the conversion rate on product pages by 10% within the next quarter.

- Improve lead generation: An objective might be to boost newsletter sign-ups by 15% over the next month.

- Enhance user engagement: An objective could be to increase the average time on site for new visitors by 20%.
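One detail worth pinning down when you write objectives like these is whether "10%" means a relative lift or an absolute change in percentage points. The sketch below shows how far apart the two readings are. The function names and the 4% baseline are hypothetical.

```python
def target_from_relative_lift(baseline_rate: float, relative_lift: float) -> float:
    """Target rate when the goal is a relative improvement (0.10 = 10% better)."""
    return baseline_rate * (1 + relative_lift)


def target_from_absolute_lift(baseline_rate: float, percentage_points: float) -> float:
    """Target rate when the goal is stated in percentage points."""
    return baseline_rate + percentage_points / 100


baseline = 0.04  # hypothetical current conversion rate of 4%

relative = target_from_relative_lift(baseline, 0.10)
absolute = target_from_absolute_lift(baseline, 10)

print(f"10% relative lift  -> target of {relative:.1%}")
print(f"10 percentage points -> target of {absolute:.1%}")
```

Stating the baseline and the target rate next to the percentage removes the ambiguity.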

2. Analyze User Behavior to Identify Areas for Improvement

Once you’ve set clear growth goals and objectives, the next step in creating a CRO strategy is to analyze user behavior on your website or application. This step is probably the one that takes the longest, but it is essential to identify areas that could affect your ability to achieve the established goals.

Oh, and if you think you know your customers, think again.

To understand how visitors interact with your site, you’ll need to collect data from various sources. Key sources include:

- Web analytics: Use platforms like Google Analytics to monitor metrics such as bounce rate, average session duration, and conversion paths.

- Heatmaps: Tools like Hotjar or Crazy Egg allow you to visualize where users are clicking, scrolling, or hovering on your pages.

- Session recordings: Review session recordings to observe how users navigate your site and identify usability issues.

- User feedback: Gather feedback through surveys, feedback forms, or customer support interactions to identify pain points and unmet expectations.

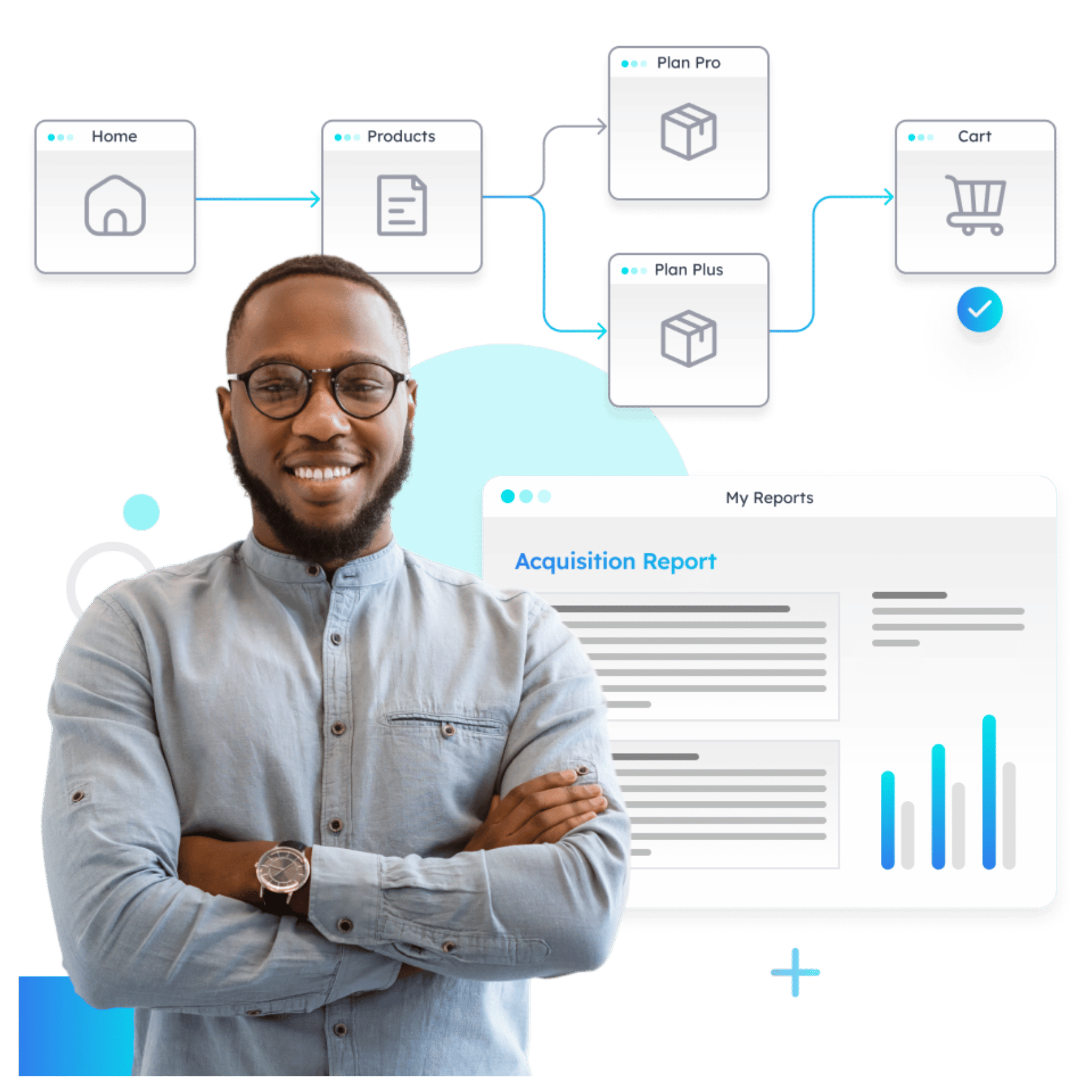

Alternatively, you can use a comprehensive tool like Pathmonk Intelligence, which offers a holistic and cookieless approach to analyzing user behavior. Pathmonk Intelligence combines the functionalities of web analytics, heatmaps, and session recordings, while also providing advanced insights such as:

- Predictive analytics: Pathmonk Intelligence uses AI-driven insights to predict user behavior and potential conversion outcomes.

- Actionable recommendations: The platform provides tailored suggestions for improving conversions based on user interactions and behavior.

- Intuitive interface: Pathmonk Intelligence features a user-friendly, intuitive interface that makes it easy for anyone to analyze user behavior effectively.

By leveraging a platform like Pathmonk Intelligence, you can streamline your analysis process and gain deeper insights into user behavior, helping you to identify key areas for improvement more effectively. If you like how it sounds, you can try our free demo.

Focus your analysis on the pages most critical to your conversion goals. These will include:

- Landing pages: Often the first interaction point for users, these pages should provide a clear value proposition and encourage further engagement.

- Product pages: For e-commerce sites, these pages are critical for conversion. Analyze how users interact with product information and call-to-action buttons.

- Checkout or signup pages: These pivotal pages should have minimal barriers to completion, such as complex forms or unexpected costs.

- Content pages: For sites focused on content marketing, analyze user engagement with articles or blog posts to identify opportunities for improvement.

When analyzing these pages, look closely at the following:

- Conversion funnels: Identify where users drop off in the conversion funnel to pinpoint sources of friction.

- Click-through rates: Evaluate the effectiveness of call-to-action buttons, links, and other interactive elements.

- Scroll depth: Use scroll maps to see how far users scroll on key pages, indicating potential issues with page layout or content structure.

- User journeys: Map out common user journeys to understand how visitors navigate your site, identifying inefficient paths.
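As an illustration of the conversion funnel analysis above, the following sketch computes the drop-off between consecutive funnel steps and flags the biggest leak. The step names and visitor counts are invented; in practice they would come from your analytics export.

```python
# Hypothetical funnel: (step name, number of visitors who reached it).
funnel = [
    ("Landing page", 10_000),
    ("Product page", 4_200),
    ("Cart", 1_300),
    ("Checkout", 700),
    ("Purchase", 450),
]


def step_drop_offs(steps):
    """Return (from_step, to_step, drop_off_rate) for each consecutive pair."""
    results = []
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        if count_a == 0:
            continue  # nothing reached this step, so no rate can be computed
        results.append((name_a, name_b, 1 - count_b / count_a))
    return results


drop_offs = step_drop_offs(funnel)
for from_step, to_step, rate in drop_offs:
    print(f"{from_step} -> {to_step}: {rate:.0%} drop-off")

worst = max(drop_offs, key=lambda item: item[2])
print(f"Biggest leak: {worst[0]} -> {worst[1]}")
```

The step with the largest drop-off is usually a good first candidate for a hypothesis, although a high drop-off early in the funnel can be perfectly normal.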

Finally, based on your analysis, you will be able to identify specific areas for improvement, such as:

💡 Improving navigation: Simplifying menus or adding clear calls to action to guide users.

💡 Enhancing page layout: Reorganizing content or using visual elements to highlight key information.

💡 Optimizing forms: Reducing form fields or improving form layout to increase completion rates.

💡 Improving content: Enhancing product descriptions, adding testimonials, or clarifying information to address user concerns.

💡 Improving page speed: Identifying and resolving performance issues affecting user experience.

If you made it to the end of this step, congratulations. By thoroughly analyzing user behavior and identifying areas for improvement, you’ll establish a solid foundation for developing effective hypotheses and conducting meaningful CRO tests.

3. Develop Hypotheses

With goals in place and a profound understanding of what’s happening on your website, the next step is to develop hypotheses. A well-constructed hypothesis provides a focused approach to testing and ultimately leads to actionable insights for optimizing conversions.

When crafting a hypothesis, keep the following tips in mind to ensure it is effective and actionable:

- Be specific: Clearly define the change you want to make and what you expect to happen. Avoid vague language.

- Be measurable: Include a specific metric that you can use to evaluate the outcome of the test. This could be a conversion rate, click-through rate, form completion rate, or any other relevant metric.

- Be relevant: Ensure your hypothesis aligns with your overarching business goals and objectives. The changes you test should contribute to achieving these goals.

- Be testable: Make sure your hypothesis can be tested through experimentation. If it’s not possible to isolate the variable you’re testing, the hypothesis may be too broad.

Here are a few examples of strong hypotheses that follow these guidelines:

Problem Statement 1: The bounce rate on the product page is higher than desired.

- Proposed solution: Adding customer testimonials to the product page.

- Expected outcome: The conversion rate on the product page will increase by 5%.

Problem Statement 2: The sign-up form completion rate is lower than expected.

- Proposed solution: Reducing the number of form fields on the sign-up page.

- Expected outcome: The form completion rate will increase by 20%.

Problem Statement 3: The average session duration for new visitors is lower than targeted.

- Proposed solution: Improving the clarity of the homepage’s value proposition.

- Expected outcome: The average time on site for new visitors will increase by 15%.
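If you track hypotheses in a spreadsheet or a script, it helps to store them in a consistent shape. Below is one possible sketch. The `Hypothesis` class and its field names are hypothetical, and the expected outcome is assumed to be a relative lift.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    problem: str          # what the data shows
    change: str           # the single change being tested
    metric: str           # the metric used to judge the test
    expected_lift: float  # assumed to be a relative lift (0.20 = 20%)

    def statement(self) -> str:
        return (
            f"Because {self.problem}, we believe that {self.change} "
            f"will increase {self.metric} by {self.expected_lift:.0%}."
        )


# Problem Statement 2 from the examples above.
signup_form = Hypothesis(
    problem="the sign-up form completion rate is lower than expected",
    change="reducing the number of form fields on the sign-up page",
    metric="the form completion rate",
    expected_lift=0.20,
)
print(signup_form.statement())
```

Forcing every idea into the same four fields makes vague or unmeasurable hypotheses easy to spot.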

4. Prioritize Testing Opportunities

After brainstorming a list of potential hypotheses, it’s important to prioritize testing opportunities. This involves evaluating the potential impact and feasibility of each test.

Consider the following criteria:

- Impact: Estimate the potential improvement a successful test could bring. For example, if the product page accounts for a large percentage of sales, testing there could have a significant impact.

- Effort: Assess the resources required to implement the test. Simple changes, like altering button text, typically require less effort than complex changes, like redesigning a checkout process.

- Risk: Evaluate the potential downside of a test. For example, significant changes to key pages might carry higher risk if they negatively impact conversions.

Prioritizing tests ensures you focus on the most valuable opportunities first.
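One simple way to turn these three criteria into a ranking is to score each idea from 1 to 5 and combine the scores. The sketch below is only an illustration: the formula (impact divided by effort plus risk), the class name, and the scores are assumptions, not an established framework.

```python
from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    name: str
    impact: int  # 1 (low) to 5 (high)
    effort: int  # 1 (low) to 5 (high)
    risk: int    # 1 (low) to 5 (high)

    def score(self) -> float:
        """Illustrative score: higher impact raises it, effort and risk lower it."""
        return self.impact / (self.effort + self.risk)


# Hypothetical backlog with made-up scores.
ideas = [
    ExperimentIdea("Redesign checkout process", impact=5, effort=5, risk=4),
    ExperimentIdea("Change CTA button text", impact=3, effort=1, risk=1),
    ExperimentIdea("Add testimonials to product page", impact=4, effort=2, risk=1),
]

ranked = sorted(ideas, key=lambda idea: idea.score(), reverse=True)
for idea in ranked:
    print(f"{idea.score():.2f}  {idea.name}")
```

Whatever formula you choose, apply it consistently so that the ranking reflects the ideas rather than who argued for them.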

If you need help with this step, you can use our CRO Testing Prioritization Framework (free template).

5. Choose a Testing Method

We’re getting to the fun part. Time to select a testing method, define how you will implement your experiments, and evaluate their outcomes.

The choice of testing method depends on various factors, including the type of hypothesis, the traffic volume of your website, and the complexity of the changes you want to test.

Here are the most common testing methods used in CRO:

A/B Testing

A/B testing, also known as split testing, involves comparing two versions of a page or element to see which performs better. In an A/B test, traffic is split between the two versions, and their performance is measured based on a predefined metric. A/B testing is straightforward, making it ideal for testing specific changes, such as changing a headline or call-to-action button.

- When to use: A/B testing is suitable for small, isolated changes and when you have sufficient traffic to generate statistically significant results.

- Example: Testing two versions of a landing page with different headlines to see which one increases conversion rates.
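Testing tools handle the traffic split for you, but the idea is easy to sketch. One common approach, shown here as an assumption rather than how any particular tool works, is to hash a visitor ID so that each visitor always sees the same version. The function name and experiment key are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to a variant using a hash of their ID."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# The same visitor always lands in the same variant.
print(assign_variant("visitor-123", "landing-headline"))
print(assign_variant("visitor-123", "landing-headline"))

# Across many visitors, the split is close to 50/50.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "landing-headline")] += 1
print(counts)
```

Including the experiment name in the hash means a visitor's assignment in one test does not determine their assignment in the next.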

Multivariate Testing

Multivariate testing allows you to test multiple changes simultaneously to understand how they interact with each other. This method involves testing different combinations of elements, providing insights into which combination yields the best result. Multivariate testing is more complex than A/B testing but offers more comprehensive insights.

- When to use: Multivariate testing is suitable when you want to test multiple elements on a page and when you have high traffic volume.

- Example: Testing different combinations of headlines, images, and call-to-action buttons on a product page to see which combination increases sales.
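The reason multivariate testing needs high traffic becomes obvious once you count the combinations. In this sketch the element options and the traffic figure are invented for illustration.

```python
from itertools import product

# Hypothetical options for each element on a product page.
elements = {
    "headline": ["Save time", "Save money"],
    "image": ["lifestyle photo", "product photo"],
    "cta": ["Buy now", "Start free trial", "Learn more"],
}

# Every combination of one headline, one image, and one call-to-action.
combinations = [
    dict(zip(elements, values)) for values in product(*elements.values())
]
print(f"Combinations to test: {len(combinations)}")

# With an even split, each combination only gets a fraction of the traffic.
monthly_visitors = 24_000
print(f"Visitors per combination: {monthly_visitors // len(combinations)}")
```

Two headlines, two images, and three buttons already produce twelve versions, so each one receives a twelfth of the visitors an A/B variant would get half of.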

Split URL Testing

Split URL testing, similar to A/B testing, involves testing two different versions of a page. However, instead of changing elements on the same URL, each version is hosted on a different URL. This method is useful for testing more significant changes, such as a complete redesign.

- When to use: Split URL testing is ideal for testing significant changes, such as new layouts or redesigned pages, and when you want to compare entirely different versions of a page.

- Example: Testing two different versions of a homepage to see which one converts more visitors.

6. Define Statistical Significance

Before launching any CRO test, it’s crucial to define what statistical significance means for your specific experiment. Statistical significance helps determine whether the results of a test are due to the changes made or merely due to random chance.

And now, a bit of math:

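- First, choose a confidence level: The confidence level describes how much protection you want against false positives. A 95% confidence level is standard: if your change truly made no difference, you would see a result this extreme by chance only about 5% of the time. This corresponds to a significance level (alpha) of 0.05, so a result counts as statistically significant when its p-value is below 0.05.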

- Determine the minimum effect size: The effect size is the minimum change between your test variants that you consider meaningful for your business. It’s essential to decide this in advance because it influences the required sample size. The effect size could be a specific percentage increase in conversion rate, a reduction in bounce rate, or any other metric critical to your test.

- Finally, calculate the required sample size: Based on your chosen confidence level and minimum effect size, calculate the required sample size for your test. This ensures that the test has enough data to reliably detect a change if one exists. Several online calculators and statistical software packages can perform this calculation from your current conversion rate, desired effect size, and confidence level. Many also ask for statistical power, the probability of detecting the effect if it really exists; 80% is a common default. We recommend this AB test calculator by CXL, but there are many others you can use.

- Do not forget to plan for variability: Accept that there’s inherent variability in any test, influenced by factors like the day of the week, seasonal traffic changes, and external events. Planning for these variations by choosing a robust test duration helps ensure that your results reflect true user behavior rather than temporary fluctuations.

Once you’re done with this part, it’s time to press that launch button! 🤓

7. Interpret CRO Testing Results

So, you’ve run your tests and gathered data. The final (and crucial) step in your CRO strategy is to interpret the results.

Analyzing Outcomes Against Hypotheses

Begin your interpretation by evaluating how the test outcomes compare to your initial hypotheses. Determine whether the data supports or contradicts each hypothesis.

If a hypothesis is confirmed, this is a green light to implement similar changes more broadly across your site to leverage the benefit.

Conversely, if a hypothesis is rejected, you’ll need to reassess whether adjustments to the hypothesis are needed or if an entirely different approach should be taken.

Additionally, assess the impact of these changes on your key metrics, such as conversion rates, user engagement, or any other metrics you targeted. This evaluation will help you understand the direct effects of your experiments.
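Most testing tools report significance for you. As a sketch of what that check looks like, here is a two-proportion z-test, one common way (not the only one) to compare two conversion rates. The visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        return 0.0, 1.0  # no conversions (or all conversions) in both groups
    z = (conv_b / n_b - conv_a / n_a) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control converts 400 of 8,000, variation 480 of 8,000.
z, p_value = two_proportion_z_test(400, 8_000, 480, 8_000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("Statistically significant at 95%" if p_value < 0.05 else "Not significant at 95%")
```

A significant result tells you the difference is unlikely to be noise; whether the lift is large enough to matter is still a business decision.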

Drawing Conclusions and Developing Insights

Delve deeper into the data to identify patterns and segment-specific responses that may not be immediately apparent. Understanding different responses among various user segments or under different conditions can provide nuanced insights into user behavior and preferences.
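To illustrate how an overall result can hide segment-specific responses, the sketch below breaks a test down by device. The segments and counts are invented, and the data layout is just one convenient assumption.

```python
# Hypothetical results per segment: variant -> (conversions, visitors).
results = {
    "desktop": {"A": (220, 4_000), "B": (280, 4_000)},
    "mobile": {"A": (180, 4_000), "B": (170, 4_000)},
}


def lift_by_segment(segment_results):
    """Relative lift of variant B over variant A for each segment."""
    lifts = {}
    for segment, variants in segment_results.items():
        rate_a = variants["A"][0] / variants["A"][1]
        rate_b = variants["B"][0] / variants["B"][1]
        lifts[segment] = (rate_b - rate_a) / rate_a
    return lifts


lifts = lift_by_segment(results)
for segment, lift in lifts.items():
    print(f"{segment}: {lift:+.1%} relative lift")
```

Here the variation helps on desktop and slightly hurts on mobile. Keep in mind that each segment has a smaller sample than the full test, so treat differences like this as leads for follow-up tests rather than conclusions.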

Also, consider any external factors that could have influenced your results, such as market trends or concurrent marketing campaigns, which might skew the data.

From this analysis, draw practical, actionable insights that can inform future optimization strategies. For example, if adding testimonials to product pages clearly increased conversions, planning to add similar features to other relevant pages could replicate this success.

Decide which results have uncovered more complex issues that require additional testing and refinement.

Implementing Changes and Future Planning

Once insights are established and conclusions drawn, move forward by implementing successful changes more broadly. Monitor the broader implementation continuously to catch any long-term effects or additional improvements that may be necessary.

Always document all findings, insights, and subsequent decisions to create a knowledge base for your team and inform future tests. This cycle of testing, learning, and applying is vital for ongoing improvement and should be a central part of your CRO strategy.

By focusing on these core elements—evaluating hypotheses, extracting actionable insights, and applying successful changes—you ensure that each testing cycle contributes optimally to improving your site’s conversion rates and user experience.

Avoiding Common CRO Testing Mistakes

CRO testing can yield significant benefits, but common mistakes can undermine the effectiveness of your efforts. So, pay attention before getting your hands dirty and avoid these pitfalls.

Not Defining Clear Objectives

One of the fundamental mistakes is starting a test without clear, well-defined objectives. Without specific goals, it’s challenging to determine whether a test was successful or to draw any meaningful conclusions from the data.

Make sure each test has a clear purpose aligned with broader business goals, and that it targets specific metrics that will indicate success or failure.

Testing Too Many Variables at Once

Another common error is attempting to test too many variables simultaneously without adequate traffic or resources to achieve statistical significance. This scattergun approach can make it difficult to pinpoint which variables actually influenced the outcomes.

Stick to testing a manageable number of changes, ideally through controlled A/B tests or well-planned multivariate tests if the traffic volume supports it.

Ignoring Statistical Significance

Jumping to conclusions without proper statistical analysis is a frequent and critical mistake. Concluding tests too early can lead to decisions based on fluctuations that are just statistical noise rather than actual effect.

Ensure each test is designed with a proper sample size to reach statistical significance, and resist the temptation to end tests prematurely based on initial trends.
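To see why ending tests early is risky, you can simulate A/A tests, where both versions are identical and any "winner" is a false positive. The sketch below compares checking once at the end with checking at ten interim points and stopping at the first significant result. All parameters (number of runs, visitors, conversion rate, number of looks, seed) are arbitrary assumptions.

```python
import random
from math import sqrt
from statistics import NormalDist


def is_significant(conv_a, conv_b, n, alpha=0.05):
    """Two-proportion z-test with n visitors in each group."""
    pooled = (conv_a + conv_b) / (2 * n)
    if pooled in (0, 1):
        return False
    z = (conv_b - conv_a) / n / sqrt(pooled * (1 - pooled) * 2 / n)
    return 2 * (1 - NormalDist().cdf(abs(z))) < alpha


def simulate_aa_tests(runs=300, visitors=2_000, rate=0.05, looks=10, seed=7):
    """Return (false positive rate with peeking, false positive rate at the end)."""
    rng = random.Random(seed)
    checkpoints = {visitors * i // looks for i in range(1, looks + 1)}
    peeking_hits = final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        any_look = final_look = False
        for n in range(1, visitors + 1):
            conv_a += rng.random() < rate  # both groups share the same true rate
            conv_b += rng.random() < rate
            if n in checkpoints:
                significant = is_significant(conv_a, conv_b, n)
                any_look = any_look or significant
                if n == visitors:
                    final_look = significant
        peeking_hits += any_look
        final_hits += final_look
    return peeking_hits / runs, final_hits / runs


peeking_rate, final_rate = simulate_aa_tests()
print(f"False positives when peeking at every checkpoint: {peeking_rate:.1%}")
print(f"False positives when checking only at the end:   {final_rate:.1%}")
```

Because the final check is also one of the checkpoints, the peeking rate can never be lower than the end-only rate, and in practice it tends to be noticeably higher.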

Overlooking External Factors

It’s easy to overlook external factors that could influence your test results. Seasonal trends, competitive actions, market shifts, or changes in advertising spend can all skew results if not accounted for.

Analyze the broader context of your test results to ensure that these factors are considered when evaluating outcomes.

Failing to Repeat Successful Tests

Sometimes, what works once might not consistently deliver in different contexts or even over time. It is crucial not to rely solely on a single test’s success but to repeat the test or conduct follow-up tests to validate the findings. This approach helps confirm the reliability of your results and ensures that your optimizations are genuinely effective.

Neglecting User Experience in Pursuit of Conversions

Lastly, focusing too narrowly on conversion metrics without considering the overall user experience can be detrimental in the long term. Tests that sacrifice usability or brand perception for short-term gains often backfire, leading to decreased customer satisfaction and loyalty.

Balance conversion optimization with maintaining a positive, engaging user experience.