Introduction

Join Pathmonk Presents as we explore Deep Media with Ryan Ofman, Head of AI Research. Deep Media pioneers AI-driven deepfake detection, protecting government, social media, and financial sectors from misinformation.

Ryan shares how Deep Media engages clients through conferences and viral deepfake detection reports, and how the team uses its website for both education and acquisition. Learn about urgent “earthquake” conversions versus proactive “aftershock” inquiries, the importance of accessible AI explanations, and the company’s work with academics on equitable AI.

Discover tips for building websites that convert, with engaging demos and compelling content. Tune in for insights on combating digital threats!

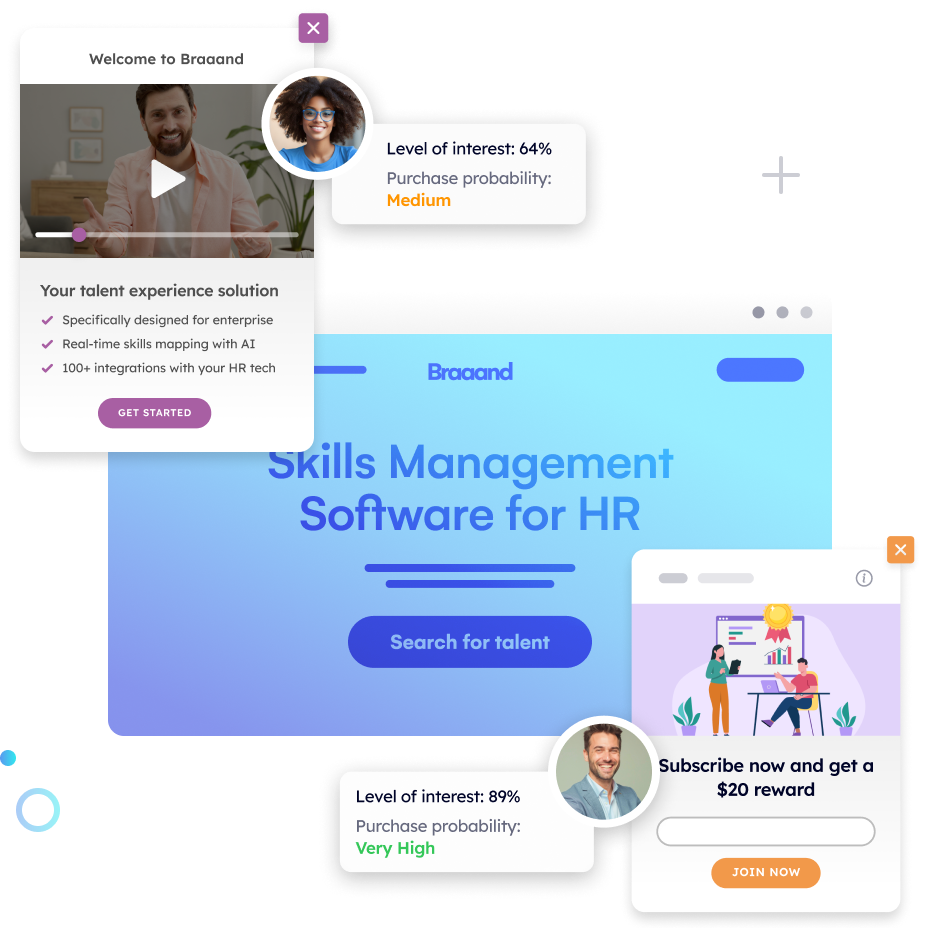


Kevin: Welcome back to Pathmonk Presents. Pathmonk is the AI for website conversions. With increasing online competition, over 98% of website visitors don’t convert. The ability to successfully show your value proposition and support visitors in their buying journey separates you from the competition.

Pathmonk qualifies and converts leads on your website by figuring out where they are in the buying journey and influencing them in key decision moments with relevant micro-experiences like case studies, intro videos, and much more. Stay relevant to your visitors and increase conversions by 50% by adding Pathmonk to your website in seconds, letting the artificial intelligence do all the work while you keep doing marketing as usual. Check us out on Pathmonk.com.

Hey everybody, welcome back to Pathmonk Presents. Today we’re really excited for our conversation because we’ve got Ryan Ofman, Head of AI Research over at Deep Media. Ryan, how are you doing today?

Ryan Ofman: Great. Thanks so much for having us, Kevin.

Kevin: Of course. Really looking forward to hearing what you guys have to tell us about Deep Media. It’s one of the most interesting companies we’ve had on the podcast recently. But before we dive into the nuts and bolts of everything, why don’t you give our audience a little background about what Deep Media is, what you guys do—just tell us all about it.

Ryan Ofman: Absolutely. In 2018, our founder, Rijul Gupta, saw the first deepfake video. I’m sure a lot of you saw it: the video with Jordan Peele on one side and President Obama on the other. You could see the perfect matching between what Jordan Peele was saying and how President Obama’s mouth was moving.

As someone who had studied artificial intelligence and machine learning, our CEO knew immediately this kind of tech was going to change the world. It was quite literally that day he went about founding Deep Media. Deep Media has gone through a lot of evolutions in the nearly seven or eight years it’s been around, but the goal has always been clear: how can we use artificial intelligence—the same technologies used to create this kind of content—as a way to safeguard the digital ecosystem against the potential threats of what AI allows bad actors to do? And how can we stay ahead of those activities using that very same technology?

Kevin: That’s a really interesting idea. I definitely saw that same video, and yeah, it was like, “Whoa. This is the direction of the future.” That’s crazy.

I was checking out the website ahead of time and I know you guys work with some really interesting departments and industries. Maybe you can tell us: who are some of the companies, industries, or verticals you’re working with, and what are the problems you’re solving for them?

Ryan Ofman: Absolutely. Now, I’m going to be well-behaved about our NDAs, as I’ve learned to be, but there are three categories of companies that we work with who are interested in deepfake detection for a variety of reasons.

First, we have government end users. We work a lot with the U.S. government to figure out how we can use deepfake detection to protect the digital ecosystem more concretely: getting accurate intelligence, preventing the spread of false intelligence, and ensuring that information being sent out by the government hasn’t been generated by AI.

Second, social media platforms, which have, intentionally or not, become the main distribution points for this kind of misinformation. These companies have done a great job so far, though there’s more to do, in using companies like us to help prevent or at least label AI-generated content. Think about things like Community Notes, or Meta now requiring labels for AI-generated posts. The tech to figure that out? That’s from companies like Deep Media.

Lastly, financial institutions. We’re seeing growing incidents of deepfake fraud—from fake voice calls requesting bank access to deepfake selfies used in identity verification. Traditional institutions weren’t prepared for these rapid AI advancements, so we help them stay ahead and manage the risk.

Kevin: Awesome answers. I think it’s interesting that you’re working across those three areas. I want to move on and talk about the audience. You’ve identified specific industries, but how are people finding out about you at the moment? Are you doing outbound, SEO, ads—is it mostly referral-based? How do most customers discover Deep Media?

Ryan Ofman: Great question. There are two main avenues: one we thought would be our primary method, and another that actually turned out to be the most effective.

The first is speaking at conferences and events about deepfakes, the state of detection efforts, and our work with government and private companies. That’s been great for exposure, helping people understand the dangers of this tech—even if it’s not impacting them yet.

But the biggest way we’ve gained traction is through deepfake detection reports. When a popular deepfake comes out—Taylor Swift, or the Mexican president photoshopped with El Chapo—we publish detailed detection reports explaining why we believe it’s a deepfake, what our detectors show, and what to look out for. We share them across socials and connect with journalists. What started as a passion project has become a major acquisition channel. People find those reports, check out our site, and dig deeper.

Kevin: That’s so interesting. Every now and then you hear about these viral deepfake moments—like the Obama video, or Taylor Swift, or now the Mexican president one you mentioned, which I hadn’t heard of. If you can insert yourself into the viral conversation with real analysis and tangible evidence, that’s really smart marketing.

Speaking of the website—I’m curious how you use it. Is it more for client acquisition, education, or both? I’d imagine there isn’t huge search volume for deepfake detection, so what’s the role of your site?

Ryan Ofman: Totally. For a service like this, which is new and novel, client acquisition and education go hand in hand. Many of the people who find us are learning about this for the first time. Through education, they realize the problem exists in their workflows, especially as they start using automated tools to process audio, video, and image data.

You’re right—the search volume isn’t massive. We get traffic spikes during big events. And we’ve tried to build the website as both a conversion and education platform. There’s detailed documentation, our blog, and detection reports all linked through the site. Even if people don’t buy immediately, they leave knowing this is a real and growing issue.

Kevin: That makes a lot of sense. So in terms of conversions, are they usually urgent—someone dealing with a deepfake right now—or more proactive, like “we should get ahead of this”?

Ryan Ofman: Great question. I’d break it into two parts: the earthquake and the aftershock.

The earthquake is when someone experiences a deepfake event directly—an exec targeted, stock impact, loss of trust—and they reach out urgently. We solve that, and it often leads to longer-term contracts: “It happened once, it might happen again. Let’s be prepared next time.”

The aftershock happens when a high-profile deepfake hits the news—like the Binance CEO Zoom deepfake. Other companies in the space say, “If it happened to them, it could happen to us,” and they reach out to explore preventative measures. That’s when we start trials and implement proactive strategies.

Kevin: Yeah, makes sense. Having you guys in place beforehand is definitely a better situation than scrambling after the fact.

Now, let’s shift to a new section focused more on you, Ryan. As a marketing professional and Head of AI Research, what in your opinion works best for driving conversions on a website? Any tips or tools you recommend?

Ryan Ofman: Definitely. Two parts here.

First, for AI services, it’s critical to make them accessible. AI can sound complex and intimidating, but it’s not rocket science to understand how it works. Maybe building it is—but explaining it shouldn’t be. For example, we say our model learns just like a human: through repetition and exposure to thousands of examples. It demystifies the tech.

Second, demos and interactive tools are vital. Let people upload content, click around, and understand how it works firsthand. That way, it’s not “Let me pass this off to the tech team,” but rather, “Hey, I get this.”

We also focus on making our website educational and interesting, with talks, blogs, and real use cases. Even if someone doesn’t have an urgent need, they can explore and learn, which keeps them engaged and builds trust.

Kevin: Totally agree—especially as Pathmonk is also an AI tool. Making content actually interesting and tailored to the right audience is key.

Next, what does a typical day look like for you?

Ryan Ofman: One of my favorite things about Deep Media is wearing many hats.

I usually start with engineering—setting up AI models, refining documentation, or updating the site. Then I shift to marketing: checking in with clients, collecting feedback, and understanding their needs.

Lately, we’ve been working closely with academics in deepfake detection—studying fairness, accuracy across skin tones, genders, and more. It’s important work, especially as these models scale.

And finally, I spend time on new tech. Whether it’s age detection, race/gender identification, or new media models, we’re always building the next big thing. That’s what brought me to this role in the first place.

Kevin: Busy day—and sounds like you love it! Where do you go to learn and grow as a marketer or AI professional?

Ryan Ofman: YouTube is huge. I follow creators who explain AI concepts accessibly. I don’t want the most technical explanations—I want clarity.

Second, GitHub. Especially in deepfake generation, you see cutting-edge work and open-source contributions. It’s inspiring.

And finally, Google Alerts—for deepfake detection, generative AI, etc. It helps me keep tabs on real-world applications and news, though with a grain of salt depending on the source.

Kevin: Agree 100%. YouTube is great for visual learners, and GitHub is a goldmine. I also love your point—there are more ways than ever to learn AI now, no matter your learning style.

Let’s wrap up with some rapid-fire questions.

What’s the last book you read?

Ryan Ofman: The Alignment Problem by Brian Christian. Fantastic and accessible book about how AI aligns (or doesn’t) with human values. Highly recommend it.

Kevin: Nice. If there were no limits to tech, what’s the one thing you’d want fixed for your marketing role?

Ryan Ofman: Remove translation barriers. We’re close. If I could work seamlessly with teams in India, Singapore, Japan—who are also dealing with deepfakes—that would be a game changer.

Kevin: Totally. And what’s one repetitive task you’d automate?

Ryan Ofman: Calendar management. The tech exists, but platforms are gatekeeping integrations. We need a universal AI that merges all calendars and optimizes your day.

Kevin: Absolutely. Last one: what’s one piece of advice you’d give your younger self starting out in marketing?

Ryan Ofman: Don’t be afraid to reach out to people who seem “above” you. You have valuable insights. Experience is important, but your perspective matters too. People are surprisingly open—go for it.

Kevin: That’s really insightful. Ryan, thank you so much for joining today. We loved having you on. Before we go, where can people learn more about Deep Media?

Ryan Ofman: Thanks, Kevin. This was awesome. You can find us at deepmedia.ai, and follow us on LinkedIn and Instagram. Next time there’s a deepfake—Google it plus “Deep Media.” We’ve probably analyzed it.

Kevin: Amazing. Thanks again, Ryan. Looking forward to speaking again soon.

Ryan Ofman: Thanks, Kevin.